Verifiable AI with Self-Sovereign Identity, a Socio-Technical Exploration

27 February - 24 June 2025

Principal Investigator: Nicky Hickman (Cheqd)

Co-Investigators: Ankur Banerjee, Javed Khattak (Cheqd)

Event attendees: 84

Project overview

The use of artificial intelligence and autonomous systems, such as AI agents, is increasing rapidly in many spheres. This raises questions about how we design, develop and manage systems for the emerging cyber-physical society and economy.

The primary challenge is enabling high-quality data access while respecting data protection, competition, and privacy laws. One solution is to enable AI to operate at the edge, under the control of individuals and their agents, so that they can exchange verifiable data signals with service providers and peers using verifiable credentials. This exploration sought to answer the question:

‘How should we design, build and operate AI Agents for healthy and trustworthy relationships with humans and the natural world?’

By collaborating, social scientists and technologists were able to demonstrate concretely that, by using existing self-sovereign identity technologies and taking a decentralized relational perspective, more trustworthy, secure and privacy-preserving AI systems can be created.

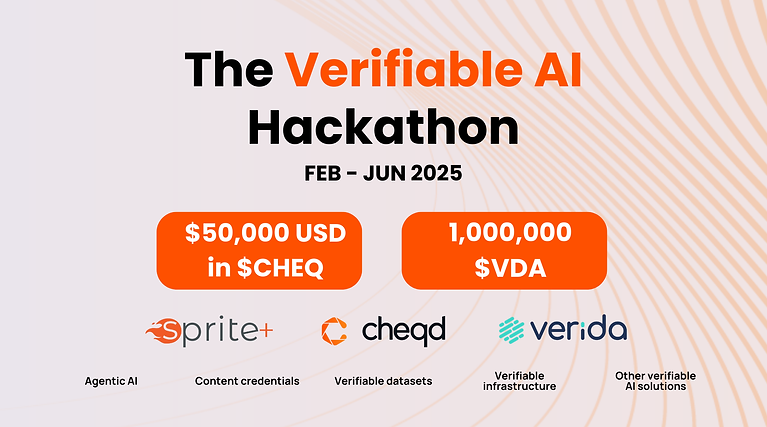

The exploration ran alongside a technical hackathon hosted by DoraHacks, ‘Verifiable AI, Save the World with cheqd’, which resulted in several innovative and functioning Verifiable AI solutions that are used as real-world implementation examples in the Relationship Guides. You can see who the winners were here and watch the Demo Day here.

The SPRITE+ investment in the social design track enabled us to experiment with several design methods, resulting in a thesis on the use of AI agents in a participatory design process, overcoming traditional challenges associated with cost and complexity.

Project outputs

Outputs: Two practical guides for designers and developers of autonomous systems.

1) Authenticity, Authorisation and Authority: A Human-Centred Relationship Guide for AI Agents & their Creators

Ideal for those developing personalised AI agents with human users, and those practicing human-centred design where the AI agent is regarded as a tool or servant of humans.

2) Authenticity, Authorisation and Authority: An Entangled Relationship Guide for AI Agents & their Creators

For those developing systems with machine (IoT) or industrial users or environmental applications, and for those whose cultural perspectives regard AI agents, the natural world and all entities as collaborators and cooperators with humans.

An experience report on the novel methodology used in the exploration was also produced, entitled Entangling Design: An Experience Report on Co-Creative Socio-Technical Design for Verifiable AI.

All documents are licensed under a Creative Commons Attribution 4.0 License and are publicly available for others to use and improve. You can find them here: https://cheqd.io/lp/verifiable-ai-social-design

Upcoming Events:

Nicky has been invited to present the Guides at the industry Smartex Future Technology Forum on 9th September 2025.